Chris Desmond, Evan Lieberman, Anita Alban, and Anna-Mia Ekström

Health and Human Rights 10/2

Published December 2008

Abstract

The aim of this article is to support efforts to hold governments accountable for their commitments to respond to HIV and AIDS. It describes a new approach to ranking countries’ responses in order to facilitate cross-country comparisons. The method uses the United Nations General Assembly Special Session on HIV/AIDS (UNGASS) Declaration of Commitment as its point of departure and was designed to rank countries in terms of their efforts to fight HIV and AIDS. Three indicators of the country response were analyzed: 1) prevention of mother-to-child transmission (PMTCT) coverage; 2) antiretroviral (ARV) coverage; and 3) the ratio of orphans to non-orphans attending school. An assessment of this nature must acknowledge the unique situation of each country, depending on its infrastructure and access to resources. To account for these differences, a regression analysis with contextual control variables was carried out to identify the variation resulting from controllable factors. It is this variation that is used to examine countries’ relative response to HIV, as it reflects what was actually achieved relative to what was expected given the context. The results highlight the efforts of not only some well-reputed, strong actors but also some unexpected front-runners. The results also point to a group of countries that are lagging behind in all regards. Comparisons between the three indicators show great variation in the focus of countries’ efforts. Rating countries’ relative response to HIV highlights countries that do well in spite of difficult circumstances. The article argues that these “relative overachievers” should be examined more closely so that lessons may be learnt from their efforts. The rating also draws attention to countries where the response is comparatively weak, and where governments, as lead actors in the AIDS partnership, bear the greatest responsibility.

Introduction

This article presents an approach to measuring the different degrees of effort given to countering the HIV and AIDS epidemic so as to rank country responses accordingly. Ranking has become an increasingly popular means of making cross-country comparisons against universal ideals, with the aim of focusing attention on, and promoting accountability for, the outcomes.1 It is the country that is ranked. While services are delivered by a range of government and non-government actors across countries, this paper is premised on the assumption that national government leaders should be held accountable for a country’s overall response.

Our main goal is to highlight where countries are setting examples or falling behind. The method and results presented in this paper are part of an ongoing project led by AIDS Accountability International to generate greater levels of accountability for the response to HIV and AIDS. The methods here are intended to provide a tool to support this project. Although we do provide specific country rankings, these results should, as discussed in the paper, be viewed as preliminary given limitations in the available data.

Such ranking is a response to a question regarding the scope of response in different countries. In relation to HIV/AIDS, there are two ways in which this question can be asked. The first is: how well are countries responding to HIV and AIDS in an absolute sense? The second is: how well are countries responding given the context in which that response is occurring? It is important to be clear on which is the focus. The first formulation of the underlying question favors (in the sense that they will be ranked well) countries that have relatively small epidemics and the resources to respond. The second formulation concedes that there are a number of contextual factors that make responding more difficult and that are not, at least in the short term, easily controllable. While this paper will consider the former formulation, it will focus on the latter. This is not to suggest that a lower level of response is more acceptable in difficult settings; rather, this approach avoids interpreting a given level of response as weak, or as reflecting low effort, merely because it would be weak if it occurred in an easier environment.

Root causes which drive differences in HIV and AIDS epidemics, such as the underlying socio-economic and cultural context, vary across and within countries. Long-term structural interventions designed to address these are often country-specific and thus are difficult to compare in a cross-country analysis such as this. We focus, therefore, on comparing the arguably more generic short-term responses which address the immediate impacts of the epidemic rather than the underlying causes. Even this requires an agreed-upon framework within which the comparison can be made. As the framework provides the basis for the ranking, it was important to identify one which carries appropriate authority and international recognition. The United Nations General Assembly Special Session on HIV/AIDS (UNGASS) Declaration was, therefore, used as the basis for agreement on what constitutes the components of a response.

The UNGASS Declaration has eleven components that cover a range of important issues from leadership to resources to prevention.2 We selected components that ultimately indicated actual service delivery and for which data were available for a large number of countries. Previous work considering cross-country comparisons of response has focused on perceptions of preparedness and readiness, such as the National Composite Policy Index and the AIDS Program Effort Index.3 Such work has been informative, but the view was taken that at this stage of the epidemic the responses should be measured in terms of actual delivery. While it would be useful to compare the extent to which leadership efforts or financial resources are correlated with patterns of actual service delivery, ultimately, we have taken the position that governments must be accountable for the latter irrespective of the former.

We evaluate countries in terms of service coverage in three areas: prevention of mother-to-child transmission (PMTCT), antiretroviral (ARV) treatment for those in need, and schooling ratios for orphaned children relative to other children. While these indicators do not cover the full range of relevant AIDS-related services, each is a critical measure of broader policy goals in prevention; care, support, and treatment; and care for children orphaned and made vulnerable by HIV/AIDS. Moreover, the components are sufficiently distinct that if a country performs well on all three, we can conclude that the country is making substantial progress in addressing the epidemic; conversely, if it performs poorly on all three, there would be sufficient grounds for stakeholders to hold a country’s political leadership accountable for those shortcomings.

The analysis used to rank countries within this framework uses a dataset compiled from publicly available country-level data provided by various international organizations. The central contribution is to report the performance of countries in facing the HIV/AIDS pandemic using a metric that controls for the structural context in which that response has occurred. That is, rather than simply reporting raw service delivery data in a manner that would suggest that any two countries are equally capable of achieving similar results, we incorporate into our analyses factors that governments could not reasonably be expected to control in the short term.

We have not merged the three components into a single measure because this would have required a subjective assessment of the relative importance of each and would lose the objectivity lent by the use of the UNGASS declaration as a benchmark for comparison. The results are reported alongside each other and grouped, but never merged or averaged. Nor are the countries placed in rank order in a league table for each component; this would convey an unwarranted sense of confidence in the accuracy of the data and the precision of the method. Country results are simply grouped into three categories: those exceeding, meeting, or falling below what would be expected given their context.

The goal of the larger project, of which this report is a part, is to develop the AIDS Accountability Country Rating.4 The initial intention was to rank all countries, but the indicators identified for use were not available for high-income countries, so these had to be excluded. Ranking high-income countries in terms of both domestic and international response remains an aim, and work in this area is ongoing.

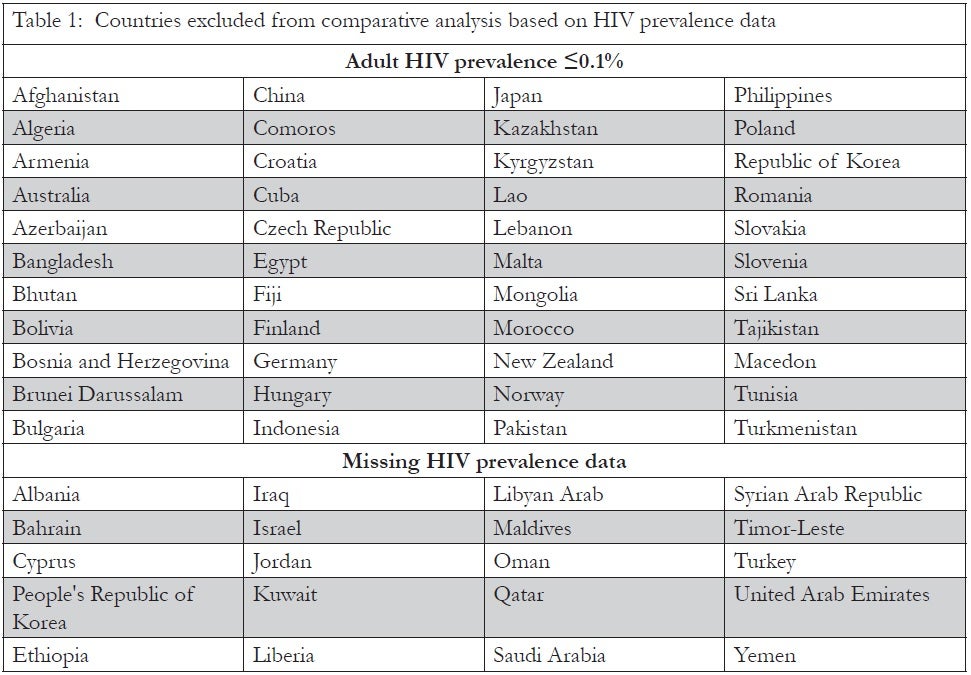

We also exclude from our analysis those countries characterized as very low prevalence (VLP), which have not yet faced major HIV/AIDS epidemics (see Table 1; all prevalence data are from UNAIDS).5 The cutoff was set at ≤0.1% of adults aged 15–49, as UNAIDS never reports a prevalence of 0%. It was not possible to fully determine the extent to which such outcomes are the result of aggressive and effective prevention programs, or of other factors, such as being relatively closed off from the rest of the world (e.g., North Korea), that have made the spread of infection less likely. To be sure, being a VLP country today does not ensure future protection against this deadly epidemic. Nonetheless, given the task at hand, these countries cannot be considered top priorities in a comparative context; in any case, the indicators used here would mean little in these contexts. Our assessment of these cases is largely ambivalent, but none can be considered a source of grave concern. Thus, we describe them as “low priority.” Within these countries, there may be higher-prevalence regions that require greater attention, but such disaggregated data are not widely available across countries, and thus such assessment is beyond the scope of our analysis.

Most very low prevalence countries are located within low-prevalence regions, which in many respects makes their outcome relatively unremarkable. However, two countries within the two high-prevalence regions, the Comoros in sub-Saharan Africa and Cuba in the Caribbean, are reported as VLP. Cuba must be recognized for its aggressive response to HIV and AIDS, albeit in a sometimes controversial manner. In contrast, UNAIDS has been sharply critical of the Comoros’ HIV response, suggesting that good government policy is less likely to be behind averted infections than other factors, such as geographic isolation.6

For a second group of countries, largely from the Middle East and North Africa but also other regions, there is simply no available data on infection levels. While we have no reason to believe that there are massive epidemics in any of these countries, the lack of data reflects a problematic lack of monitoring for the global epidemic. Governments in these countries ought to be taking steps to monitor infection levels and report them to UNAIDS.

The ranking presented in Table 1 considers delivery and not actual impact on the biological markers of the epidemic’s progression such as HIV incidence and deaths from AIDS (see Table 1 below). Outcomes such as reduced mortality and incidence, which in the end are the primary goals, are determined not only by efforts directed at responding but also by pre-existing practices such as circumcision, socioeconomic conditions, sexual networks and sexual behavior patterns. Such indicators do not provide a common standard against which government responses can be compared and ranked because so much of the variation is beyond their control.

Methods

An exercise of this nature faces inherent difficulties. Indicators are often not consistently measured across countries; unique features of certain countries may generate different performance expectations; and stakeholders are likely to disagree about the importance, inclusion, or exclusion of certain indicators. Notwithstanding these concerns, we view these efforts as an important first step in meaningful comparison. Given the enormous expense and effort associated with gathering these international data on the part of international organizations such as UNAIDS and its country partners, we believe it is imperative that these data begin to be analyzed more systematically despite recognizable shortcomings. The method is presented below in some detail not only to explain the current results but to prompt debate on how to improve on these for future rankings, as the method will be revised and results updated on an ongoing basis. The rating should not be interpreted as a “final word” on the evaluation of any single country. Rating ought rather to be used as a starting point for comparison and investigation alongside other comparative tools.

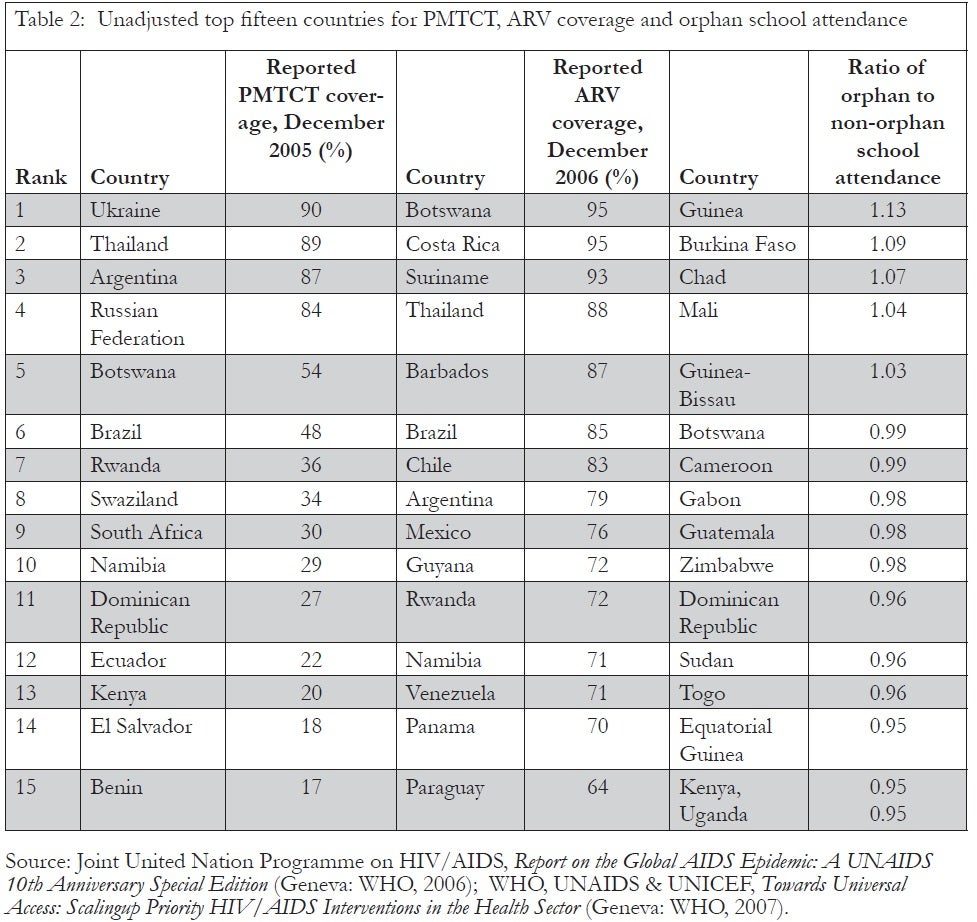

Table 2 reports the top 15 countries using the “raw” data for each indicator selected, drawing attention to the top performers in terms of coverage, unadjusted for context (see Table 2 below). Such a ranking does not take into account differences in the challenges faced or in the structures and resources available for a response. The analysis adjusts for these factors in order to identify relative priority: certain responses, while not always at a high level, indicate high-level effort given the environment in which they are occurring.

In order to adjust for context, we regressed each of these three indicators separately against variables that were selected to proxy for the key background factors. Once estimated, the regression was used to generate predicted scores for each country, given their actual values on the control variables. The difference between the actual value of the indicator and its predicted value (actual minus predicted) was used as the country’s new “score.” Countries could then be ranked from the highest positive to the lowest negative deviation from expected results. As the exact position in the ranking is influenced by data quality and proxy selection, it was decided not to report the rank but rather to group the countries into the top, middle, and bottom thirds, which can be interpreted as countries exceeding, meeting, or falling below expectations.
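The scoring step can be sketched in a few lines. This is a minimal illustration, not the authors’ Stata code: it assumes a single hypothetical control variable and made-up values for six hypothetical countries (“A” to “F”), fits OLS via the normal equations, scores each country as actual minus predicted, and splits the ranked list into thirds coded 1, 2, and 3. In the paper the indicators are log transformed and several controls are used.

```python
# Sketch of residual-based scoring: fit OLS, score = actual - predicted,
# then split countries into thirds. Data and country names are hypothetical;
# this is an illustration, not the authors' Stata code.

def ols_fit(X, y):
    """OLS coefficients (intercept first) from the normal equations (X'X)b = X'y."""
    rows = [[1.0] + list(x) for x in X]  # prepend an intercept column
    k = len(rows[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(k):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    return [A[i][k] / A[i][i] for i in range(k)]


def predict(beta, x):
    """Fitted value for one country's control variables."""
    return beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))


def score_and_group(names, X, y):
    """Score = actual - predicted; group 1/2/3 = top/middle/bottom third."""
    beta = ols_fit(X, y)
    scores = {n: yi - predict(beta, x) for n, x, yi in zip(names, X, y)}
    ranked = sorted(names, key=lambda n: scores[n], reverse=True)
    third = len(ranked) / 3
    groups = {n: 1 + min(int(i // third), 2) for i, n in enumerate(ranked)}
    return scores, groups


# Hypothetical data: one control variable, six countries.
names = ["A", "B", "C", "D", "E", "F"]
X = [[1], [2], [3], [4], [5], [6]]
y = [13, 13, 16, 18, 19, 23]
scores, groups = score_and_group(names, X, y)
print(groups)
```

With these made-up values the fitted line is y = 10 + 2x, so A and F land in group 1 (exceeding expectations), C and D in group 2, and B and E in group 3. Reporting only the group rather than the rank mirrors the reasoning above: small shifts in the residuals from data or proxy changes move a country within its third far more often than across thirds.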

Our analyses reflect and draw upon other work examining the relationship between indicators such as these and a broader range of factors thought to influence AIDS-related policy outcomes.7 However, these other works seek to explain the full range of cross-country variation and they attempt to identify more proximate social and political factors — such as a country’s regime type (level of democracy) or its degree of ethnic heterogeneity — that might make responses more or less likely. By contrast, we made theoretical decisions about what constituted a background context, and limited this entirely to socioeconomic conditions and health infrastructure. In this manner, the effects of other factors are still reflected in our final assessment of countries.8

Our performance indicators reflect overall country outcomes; they say nothing about the distribution of who provides and who receives these services. A challenge of measurement and accountability is that almost all responses to AIDS include a mix of government and non-government actors, at the sub-national, national, and international levels. The use of country-level data in the analysis of a global pandemic reflects the nature of the international state system, in which national governments enjoy sovereignty, and ultimately hold responsibility for what goes on within their territories.

The following sections detail the substantive importance of each indicator selected and the control variables used in the regression analyses.

Prevention of mother-to-child transmission

A widely proven, inexpensive, and well-accepted strategy for preventing new infections is the provision of antiretroviral drugs to HIV-positive pregnant women, an intervention that reduces the risk of transmission to the infant. At the country level, we analyze the “Estimated percentage of HIV-infected pregnant women who received ARV for PMTCT, 2005,” part of the UNGASS reporting mechanism and an agreed-upon measure of response.9 The indicator is self-reported, in that national governments provide the data on the level of coverage being achieved in their countries and these data are not verified by an independent source. Such self-reporting is a strength in that countries are ranked with data their governments provide and cannot easily dispute, but problematic in that the data have not been verified; misreporting may occur, and this should be kept in mind when reviewing results.

To be certain, other prevention interventions are critical in the fight against HIV/AIDS, but many tend to be more problematic for making cross-country comparisons. For example, condom distribution or use may be largely determined by pre-existing family planning efforts or cultural patterns of sexuality that would be hard to compare without more sensitive time-varying data. And while education and attempts at sexual behavior change are key components of a response, existing indicators appeared less likely to reflect the efforts of service delivery that are central to the motivation of this analysis.

Unfortunately, our measure of PMTCT coverage has shortcomings. For example, it does not consider quality of care to pregnant women. Although PMTCT may not be as pressing in countries without heterosexual epidemics, the analysis was only conducted with countries that reported on their PMTCT, meaning they regarded it as important enough to have a program.

Table 2 reports unadjusted PMTCT coverage as a benchmark for comparing how country rankings change when performance is measured in a relative context. Theoretically, there is a range of contextual factors which could be argued to be beyond short-term control, hindering or helping the delivery of services. Because we were developing a new approach, we were unable to draw heavily on past work to guide the selection of which factors should be included and which variables best proxy for them. These contextual factors may, however, include the following:

HIV prevalence: A country’s inclination to act and the challenge of acting are both related to the scale of the epidemic. It is generally more difficult to provide treatment to larger proportions of the population, although in very low-prevalence countries it may be difficult to locate the need. Also, in high-prevalence countries, HIV is likely to be more of a national priority and international response efforts are also more likely to focus on these countries. Higher-prevalence countries would then be expected to have higher coverage. This impact appears to dominate and HIV prevalence is positively correlated with coverage. This inclusion effectively holds higher prevalence countries to a higher standard, which should be considered when examining the results.

HIV-positive population: Interventions for small HIV-positive populations are easier to plan than for large populations, even if the prevalence is low. HIV population data were therefore included from UNAIDS.10

Other health demands: Different health situations mean different levels of demand for other health services. Higher demand for other health services makes responding to HIV and AIDS more difficult. To account for this, the estimated number of Disability-Adjusted Life Years (DALYs) lost per 100,000 population to causes other than AIDS was included as a proxy for other health demands.11

Health system access and coverage: While this is to some extent controlled by the state, changes may be difficult to implement in the short term, and the focus here is on ranking current decisions. Low levels of access to health services make delivery difficult. To proxy for health system coverage, the variable “Percentage of births attended by skilled health personnel” was used.12 This was favored over variables such as the availability of health service staff, as these indicators do not consider distribution. Furthermore, the selected indicator relates to general infrastructure, as opposed to indicators such as TB treatment coverage that relate to vertical programs. This indicator has been used in previous cross-country analyses, notably of variations in maternal mortality, and it has also been linked to delivery of highly active antiretroviral therapy (HAART).13

Level of urbanization: Reaching rural populations with services, particularly those that involve tests and controlled, uninterrupted supply of drugs, is more difficult than in urban centers. To account for these differences, the “level of urbanization” was introduced as a control.14

GDP per capita: Availability of resources obviously plays a major role in determining the ease with which treatment can be provided. The variable used for this purpose was GDP per capita.15 The data used were from 2004, the year before the indicator selected, as this is when budgeting would likely have occurred. Although an average of the past few years would avoid the impact of short term fluctuations, purchases of drugs and other consumables may be sensitive to short-term fluctuations and so a single year was considered more appropriate. Some measure of national income per person is generally used in cross-country analysis of health service delivery and has previously been linked to HIV services.16

The contextual control variables were selected both because of the authors’ strong prior beliefs that these were the factors most likely to influence the efficacy of any efforts to address the epidemic and because they have appeared as control variables in other quantitative studies of development policy more generally and of AIDS policies specifically.17 It is also worth noting which variables were not adjusted for. Donor efforts were not measured, for example, given our greater concern with the extent of service coverage than with the efficiency of the translation from inputs to outputs. Donor support may also result from the efforts of domestic actors; to that extent it is a controllable variable, and it would be inappropriate to include it as a control. To the extent that it is not domestically controlled, its omission is a shortcoming. Finally, regional dummy variables are not included because we see no theoretically compelling reason, for our analysis, why region would exert an independent effect on the possibility for a country’s response beyond the variables for which we already control, such as HIV prevalence and per capita income. It is true that within regions, certain factors may help to drive responsiveness, such as regional organizations or the diffusion effect of neighboring countries’ responses, but it is precisely those types of efforts that we hope to capture in our ranking system. In short, we seek to control for the relative magnitude of the problem and for “structural” factors, in order to measure the relative coverage of relevant prevention and treatment services.

ARV treatment coverage

In recent years, no policy intervention has received greater international attention than the imperative to provide antiretroviral drugs to HIV-positive individuals who are at an appropriate clinical stage to benefit from such treatment. We use the most widely discussed measure of drug roll-out, “The estimated antiretroviral therapy coverage, December 2006,” which says nothing about the overall quality of treatment.18 Nonetheless, this is obviously a critical indicator of the scope of a country’s response, and it would be reasonable to conclude that given the specific demands of administering ARVs, treatment coverage reflects more broadly on AIDS-related service coverage.

The control variables used in the treatment component were the same as those used in the previous component: HIV prevalence, HIV population, other health demands, health system access and coverage, level of urbanization, and GDP per capita; the motivation for their inclusion was likewise the same.

Ratio of orphan to non-orphan school coverage

A central concern in the global AIDS epidemic is that of children being orphaned in large numbers, often without sufficient care and support. Unfortunately, there exists no widely accepted policy intervention for specifically addressing this problem and, in turn, there are no obvious measurable indicators of service delivery. Nonetheless, we deemed this concern to be sufficiently relevant in a comparative effort to ensure accountability that we included in our analysis a measure of “Ratio of the proportion of orphans (mother and father both dead) aged 10–14 attending school to the proportion of non-orphans (living with at least one parent) aged 10–14 attending school,” recognizing the generalized nature of this problem beyond the AIDS pandemic.19 While some more specific indicators addressing the concerns of orphans and vulnerable children have been identified as part of the UNGASS reporting mechanism, the response rate has been very poor. Given the importance and scope of the problem of children orphaned by the epidemic, we consider the ratio a potentially important measure of the degree to which mechanisms are in place for social protection.

Controls incorporated in the regression include HIV prevalence, HIV population size, level of urbanization, and GDP per capita; the motivations for including these are essentially the same as for the previous components. In addition to these factors, the percentage of children who are orphans is included, as is the total number of orphans, reflecting the absolute scope and logistical challenge of meeting this need.

Absolute or relative differences and data transformations

As already discussed, the central analytic strategy is to regress key indicators of service delivery on background factors that we have deemed to be beyond the control of a government or other stakeholders in the near term, and then to calculate the residual or deviation from average expected values. In these terms, when a country’s service delivery exceeds the predicted value, we label this an overachievement, while failing to reach it is considered as an underachievement. Any analytic approach implies a number of assumptions and choices, and a central concern is the proper use and interpretation of the scale of measures.

First, one could reasonably ask whether a similarly sized residual should be interpreted as a similar magnitude of “under-” or “over-” achievement, regardless of the country’s predicted value. In our approach, if country A was predicted to have 10% coverage on one indicator, but in practice had 20% coverage, while country B was predicted to have 70% coverage, but in practice had 80% coverage, we would treat these two cases identically, as “over-achievers” of 10%. One could certainly object that country A had doubled its predicted value and that this ought to be interpreted as a “greater” achievement than what was found in country B. Alternatively, one could insist that the much greater overall coverage in B ought to be weighted more heavily. These are valid, yet subjective, concerns that could be addressed in different ways by different analysts. We have opted to weight absolute differences at the lower end of the distribution more heavily. Because the actual distributions of our indicators are heavily concentrated at the lower end of the scale (i.e., positively skewed), we log transform those indicators, which at the very least makes our approach more sensitive to performance differences at the lower end of the distribution. When log transformed, a given percentage point difference between the actual and the predicted value is associated with a larger residual the lower the predicted value. As the residual is used to rank, if two countries have the same absolute difference between predicted and actual delivery, the country which was expected to perform at a lower level will score better relative to expectations than the country expected to have a higher rate of service coverage. The analysis was repeated using absolute differences as part of the sensitivity analysis to show the impact of this adjustment.
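The effect of the log transformation on the two hypothetical countries above can be checked directly. The sketch below assumes coverage is expressed in percent and is strictly positive (a log of zero is undefined, and how zero coverage was handled is not stated here); the predicted values are taken as given rather than estimated.

```python
import math

# Absolute vs log-scale residuals for the two hypothetical countries in the text.
# Coverage is in percent and must be > 0 for the log to be defined.

def abs_residual(actual_pct, predicted_pct):
    """Percentage-point difference: actual minus predicted."""
    return actual_pct - predicted_pct

def log_residual(actual_pct, predicted_pct):
    """Difference on the log scale, i.e. log(actual / predicted)."""
    return math.log(actual_pct) - math.log(predicted_pct)

for name, predicted, actual in [("A", 10, 20), ("B", 70, 80)]:
    print(name, abs_residual(actual, predicted), round(log_residual(actual, predicted), 3))
# A 10 0.693
# B 10 0.134
```

Both countries exceed their prediction by 10 percentage points, but on the log scale country A’s residual is roughly five times larger, which is exactly the extra weight at the lower end of the distribution described above.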

In an analogous manner, it was important to transform two of the contextual control variables, based on the theoretical principle of diminishing marginal returns and on our observation that their distributions were highly skewed (approximately exponential). The higher the income of a country, the easier it is to provide services, but with diminishing effect. To account for these diminishing marginal returns, a log transformation of the GDP per capita variable was carried out prior to its inclusion. The additional pressure from each increase in HIV prevalence is also likely to diminish, and so HIV prevalence was also logged. There is little reason to argue the same for the other variables; as a result, they were included in their original form.
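The sketch below shows how the transformed regressors might be assembled and why logging GDP per capita encodes diminishing marginal returns. The function name, argument order, and the coefficient `B_LOG_GDP = 0.5` are hypothetical and chosen only for illustration; no estimated coefficients are reported in this article.

```python
import math

def transform_controls(gdp_per_capita, hiv_prevalence_pct, hiv_population,
                       other_dalys_per_100k, births_attended_pct, urban_pct):
    """Hypothetical regressor row: GDP per capita and HIV prevalence logged, others raw."""
    return [math.log(gdp_per_capita), math.log(hiv_prevalence_pct),
            hiv_population, other_dalys_per_100k, births_attended_pct, urban_pct]

# Hypothetical coefficient on log(GDP per capita), for illustration only.
B_LOG_GDP = 0.5

def gdp_contribution(gdp):
    """Contribution of income to the predicted (log) coverage."""
    return B_LOG_GDP * math.log(gdp)

# The same +$500 adds much more at low income than at higher income...
gain_low = gdp_contribution(1000) - gdp_contribution(500)
gain_high = gdp_contribution(5500) - gdp_contribution(5000)
# ...while every doubling of income adds the same amount.
doubling_1 = gdp_contribution(1000) - gdp_contribution(500)
doubling_2 = gdp_contribution(8000) - gdp_contribution(4000)
print(round(gain_low, 3), round(gain_high, 3))
```

Under a log specification a fixed coefficient means each doubling of income shifts the prediction by the same amount, so each additional dollar matters less as income rises; the same logic applies to logged HIV prevalence.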

Regression estimation and sensitivity analysis

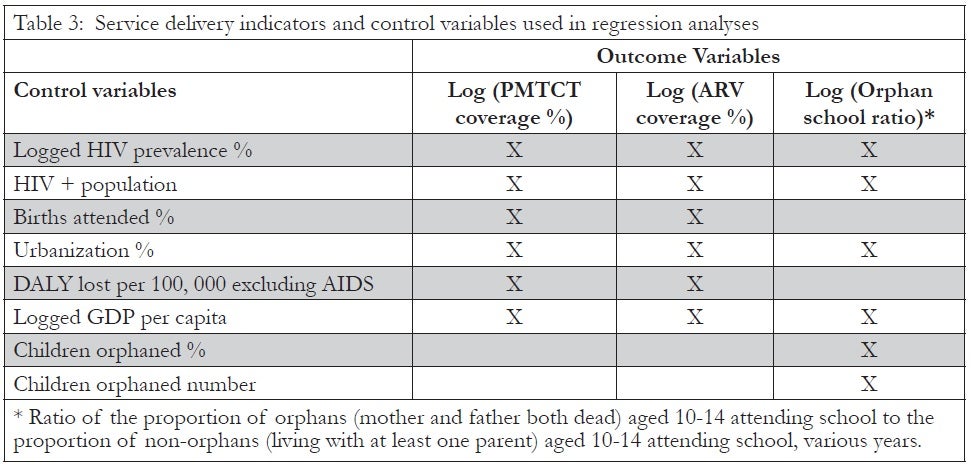

The three sets of regressions were estimated with STATA version 8 using Ordinary Least Squares (OLS).20 The variables incorporated in the regression analyses are identified in Table 3 (see below). We do not report the specific results of our regression estimates because the objective of the analyses was not to test specific hypotheses about the structural determinants of service delivery or to estimate the magnitude of those effects. Rather, our central task was to assess from a series of model specifications the extent to which cases consistently performed better, worse, or generally as one would expect given the performance of countries with similar background conditions.

It is important to recall that the purpose of the analysis is not to explain all of the cross-country variation in these AIDS-related indicators, but simply to identify the extent to which certain structural factors explain cross-country variance. The remaining variance is what is of concern to us, and this might be explainable by more proximate social, political, and economic factors. The omitted variable bias that results from this approach is actually to some extent desired, as the impact of omitted factors, at least those uncorrelated with the controls, remains in the error term and so shapes the ranking. The impact of omitted factors that are correlated with the controls, however, is at least partly absorbed into the estimated coefficients on those controls and, to that extent, is not reflected in the ranking.21

Since our approach is to rate countries based on performance relative to other countries, separate regressions were estimated for each country, excluding the country being scored from the estimation process. The results were then used to predict the expected coverage for the excluded country and from that, the score. As a summary of the models, a single regression for each component including all countries is included in an annex to this paper.22
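The leave-one-out procedure can be sketched as follows. For brevity this assumes a single hypothetical control variable and closed-form simple regression; the country names and values are invented. Each country’s score comes from a fit estimated on all other countries, so an unusual country cannot pull the fitted line toward itself.

```python
def fit_simple_ols(xs, ys):
    """Intercept and slope of a one-regressor OLS fit."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope

def leave_one_out_scores(names, xs, ys):
    """Score each country (actual - predicted) against a fit that excludes it."""
    scores = {}
    for i, name in enumerate(names):
        b0, b1 = fit_simple_ols(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        scores[name] = ys[i] - (b0 + b1 * xs[i])
    return scores

# Hypothetical data: E sits 10 points above the line the other countries follow.
names = ["A", "B", "C", "D", "E"]
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 21]
loo = leave_one_out_scores(names, xs, ys)

# For comparison: E's residual when E is included in its own fit.
b0, b1 = fit_simple_ols(xs, ys)
in_sample_E = ys[4] - (b0 + b1 * xs[4])
print(round(loo["E"], 6), round(in_sample_E, 6))  # 10.0 4.0
```

In this toy example, including country E in its own regression steepens the line and shrinks its apparent overachievement from 10 to 4 points; excluding it, as described above, measures E purely against the experience of the other countries.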

Unlike standard regression analyses that seek to explain with a set of independent variables as much variance as possible in a dependent variable, we do not use standard criteria such as goodness of fit or the statistical significance (p-values) of individual coefficients to evaluate our analysis. Rather, more akin to the use of ratio variables, we assert that the background variables need to be taken into account. But in contrast to a ratio variable approach, which would fully transform a variable based on a contextual factor, the regression approach allows us to make more incremental adjustments. The degree to which background factors shape our ranking depends upon their average effect, irrespective of goodness of fit. A weak average effect, evidenced by a substantively small regression coefficient, simply implies that for this set of observations a particular background factor had little substantive impact on the adjusted ranking relative to the unadjusted (raw) scores. In future analyses such factors could prove more influential.

A number of decisions made in the development of the method are debatable and in recognition of this a sensitivity analysis was conducted. The results of this are included in a detailed annex, available online.23

Results

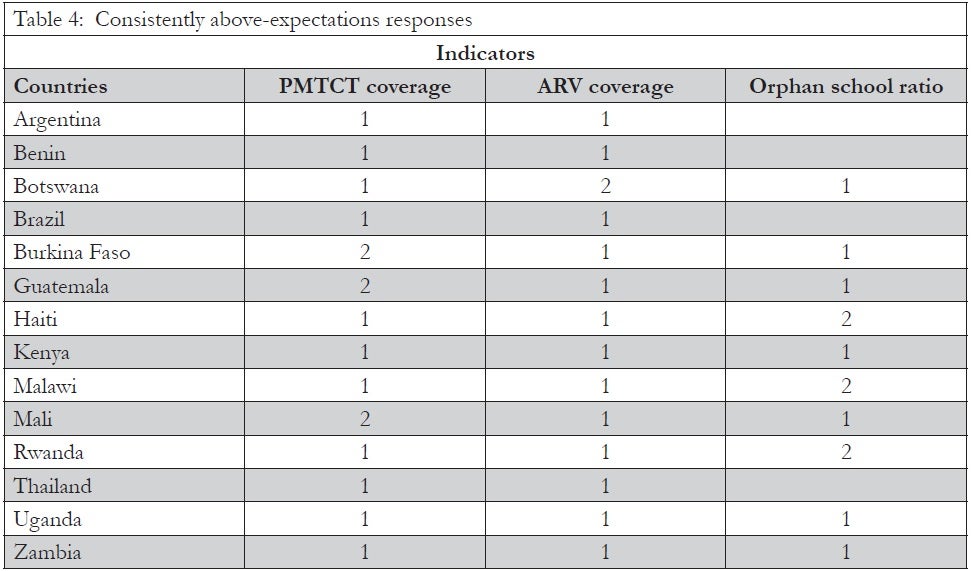

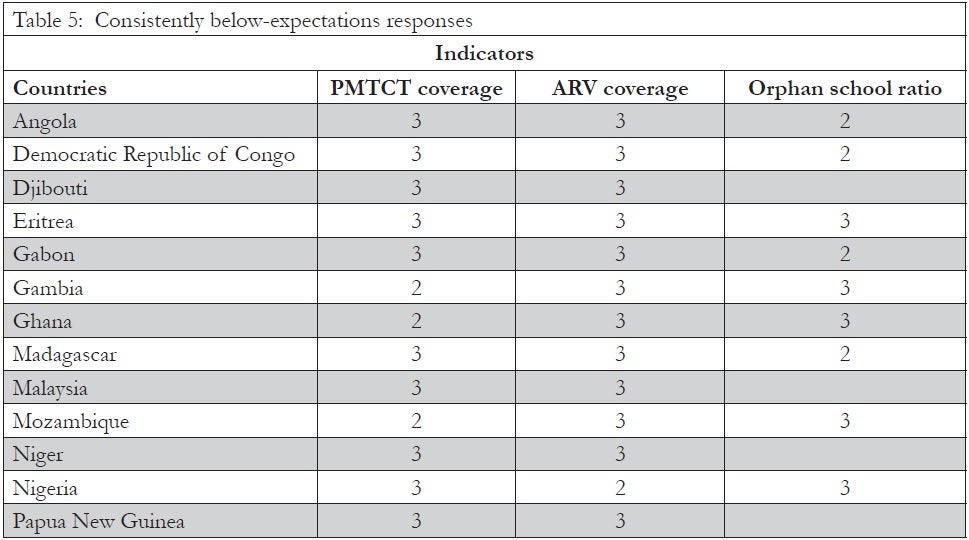

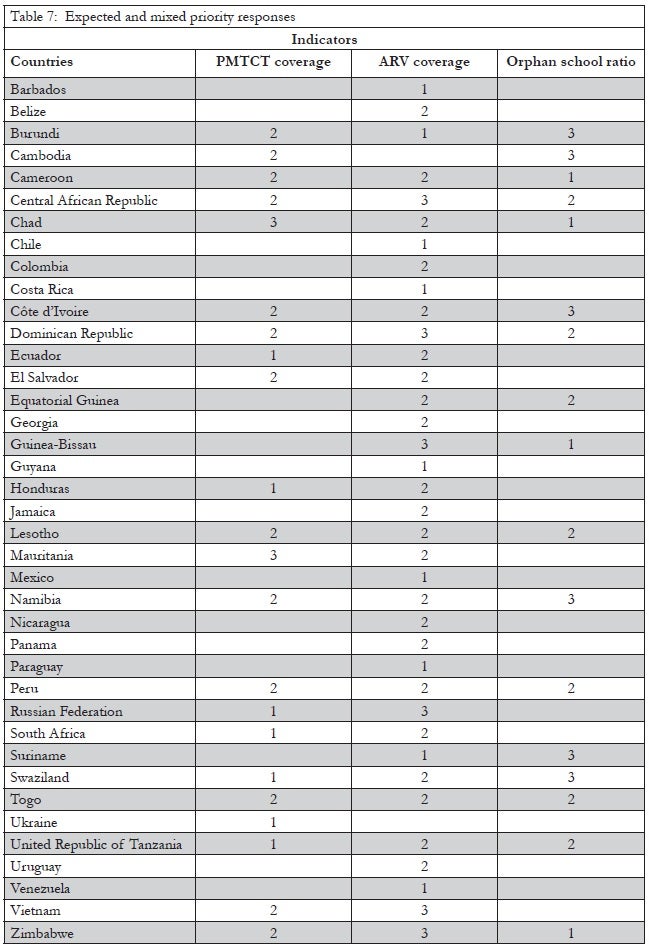

In Tables 4 through 7, we report the results of our analyses, controlling for background factors. In these tables, placement in the above-expectations group is indicated by a 1, meeting-expectations by a 2 and below-expectations by a 3. To assess how our analyses change relative rankings based on raw coverage data, these results should be compared with the unadjusted “leader boards” presented in Table 2.
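The three-way classification can be sketched as a split of each component's scores into thirds (the discussion below refers to the "top third"), with countries reporting 1% coverage or less placed directly in the bottom group, as note 21 describes. Whether the thirds are computed over regression countries only, as assumed here, is not stated in the text; the function name and data are hypothetical.

```python
def assign_groups(scores, coverage, floor=0.01):
    """Return {country: group} with 1 = above, 2 = meeting, 3 = below expectations.

    scores:   {country: score} for countries included in the regression
    coverage: {country: reported coverage as a proportion} for every country
    """
    # Countries at or below the floor were excluded from the regression (note 21)
    groups = {c: 3 for c, cov in coverage.items() if cov <= floor and c not in scores}
    ranked = sorted(scores, key=scores.get, reverse=True)  # best score first
    n = len(ranked)
    for position, country in enumerate(ranked):
        groups[country] = 1 + (3 * position) // n         # split into thirds
    return groups

scores = {"A": 0.9, "B": 0.5, "C": 0.1, "D": -0.2, "E": -0.4, "F": -0.8}
coverage = {"A": 0.6, "B": 0.5, "C": 0.3, "D": 0.2, "E": 0.2, "F": 0.1, "G": 0.01}
print(assign_groups(scores, coverage))
# {'G': 3, 'A': 1, 'B': 1, 'C': 2, 'D': 2, 'E': 3, 'F': 3}
```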

The contextualized analysis leads to substantial changes in the identification of several leaders and laggards in policy responsiveness. For example, based on raw scores alone, several countries can be identified as having among the best results for two or three of the components, including Argentina, Botswana, Brazil, Namibia, Rwanda, and Thailand. These countries provide examples of relatively substantial responses, but they are not always the greatest overachievers once background conditions are taken into account.

Table 4 presents results for each country placed in the top group (an above-expectations score, or 1) in at least two components, even after controlling for background factors (see Table 4 below).

Table 4 does not necessarily list the countries with the highest coverage but rather those that, according to the analysis, are consistently delivering relatively high service coverage in responding to HIV and AIDS. A number of these countries were placed in the above-expectations group in all three components. Notably, no country in the above-expectations group for two components was found to be in the bottom group for the remaining component, suggesting that strong AIDS-related service delivery in these countries tends to be general rather than confined to a single component.

A number of the countries generally associated with strong responses, such as Brazil, Thailand, and Uganda, are included. Botswana is placed in group 2 (meeting expectations) in the treatment component only because of the high standard against which the country was measured (given its generally high level of development relative to other countries in the sample). Although Rwanda has not historically been associated with a strong response, it now appears to be placing a high priority on responding. Although, given the nature of the method, we decided not to report country rankings, Rwanda placed first in both the treatment and prevention components by some distance, and this outcome holds under the sensitivity analysis. The Rwandan response has certainly exceeded expectations.

Table 5 lists those countries where the response appears to be relatively poor. These are the countries that placed below expectations in at least two components (see Table 5 below).

Despite below-expectations treatment results for the countries in Table 5, some service coverage was observed in most cases. Only nine of the 72 countries examined reported ARV coverage of less than 10% and only two reported 1% (Madagascar and Sudan). For the prevention component, the bottom group is largely made up of countries where there is no meaningful PMTCT intervention. Twelve of the 55 countries examined reported only 1% coverage.

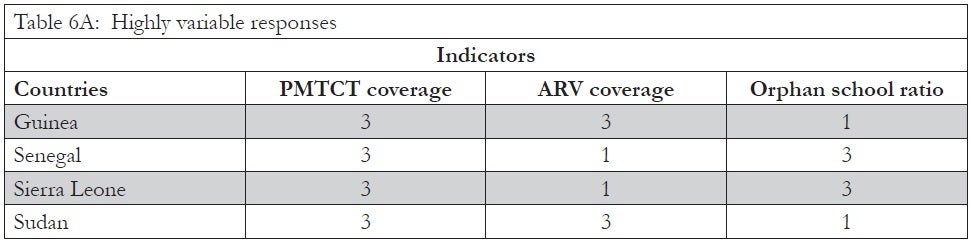

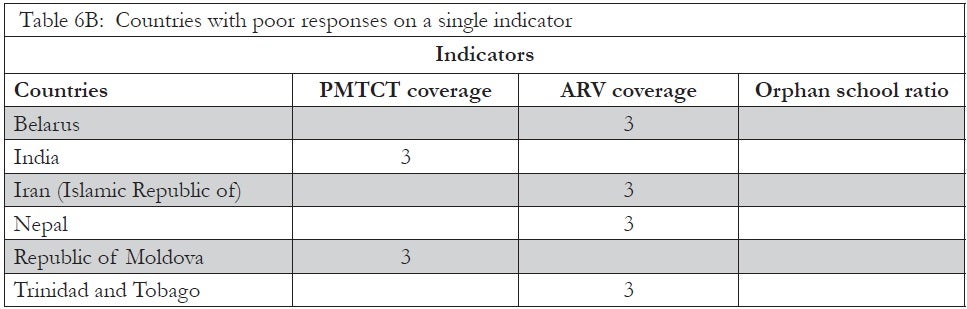

Among the countries identified as having performed below expectations in two components, performance on the third component varied. Within this group, those identified as performing as expected or below expectations for the third component were placed in Table 5 for consistently poor performers. Alternatively, those countries that exceeded expectations for the third component were placed in Table 6A, which identifies cases of highly variable responses. Table 6B identifies countries for which we had valid data to conduct analysis for only a single component, and which received a below-expectations score for that component (see Tables 6A and 6B below). The countries in Table 6B ought to be held accountable both for weak service delivery and for weak monitoring of key services; the absence of data may itself simply reflect a lack of service provision.

Senegal is often considered a success story, an example of a country that has managed to keep prevalence down, but is included in Table 6A because of poor performance in relation to PMTCT and orphan schooling. This classification as a highly variable response should, however, be considered carefully. If we had been able to find an indicator that reflected other aspects of prevention and used that in place of PMTCT, it is likely that the country would have placed in the above-expectations group for this and the ARV component, placing the country in the high-performing group overall. It is important to keep Senegal’s success in other areas of prevention in mind, but in terms of PMTCT and orphan schooling the response, with reported PMTCT coverage of only 1% and a schooling ratio of 0.74, does not compare well. This result suggests the need for caution in cross-country comparisons. In low-prevalence regions, coverage of programs for sexually transmitted infections or targeted interventions for specific risk groups might have better reflected the specific strengths and weaknesses of country responses.

Finally, Table 7 lists the countries with more mixed results: some evidence of "meeting" expectations, combined with missing data (see Table 7 below). The school ratio data are from specific international surveys; national governments are not necessarily at fault if such results are not available. The treatment and prevention indicators, by contrast, were expected to be reported as part of the UNGASS monitoring system. For this reason, countries that may have been placed in the above-expectations group for the one component for which data were available were essentially "penalized" in our classification scheme for failing to report data for other components.
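The assignment of countries to Tables 4 through 7 can be summarized as a small decision rule over the three component groups. This is a reconstruction from the text, not the authors' code: the treatment of a missing third component in Table 5, and the reading of Table 7 as "any remaining country with missing data," are assumptions, and countries with complete but mixed results are returned as unlisted.

```python
from typing import Optional, Sequence

def classify(groups: Sequence[Optional[int]]) -> Optional[str]:
    """Map component groups to a results table.

    groups: one entry per component (treatment, PMTCT, orphan schooling);
            1 = above, 2 = meeting, 3 = below expectations, None = no valid data.
    """
    present = [g for g in groups if g is not None]
    above, below = present.count(1), present.count(3)
    if above >= 2:
        return "Table 4"                    # consistently strong
    if below >= 2:
        # third component above expectations -> highly variable response
        return "Table 6A" if above == 1 else "Table 5"
    if len(present) == 1 and below == 1:
        return "Table 6B"                   # single component, below expectations
    if len(present) < len(groups):
        return "Table 7"                    # mixed results with missing data (assumed)
    return None                             # complete but mixed: not listed (assumed)

for example in ([1, 1, 2], [3, 3, 2], [3, 3, 1], [None, 3, None], [1, None, None], [1, 2, 3]):
    print(example, classify(example))
```

Under this rule a country with a single above-expectations score and no other data lands in Table 7, which is the "penalty" for non-reporting described above.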

Discussion

The results highlight the variability in service delivery related to the AIDS epidemic in the sample of countries examined. A number of countries that were already identifiable in the top-15 raw score rankings also appeared in the "above-expectations group" for that component, even after adjusting for background factors. However, we observe some important differences between the relative rankings adjusted for background factors and the unadjusted rankings.

In general, we believe that this approach provides better-calibrated rankings for comparing countries, but not all scores appear to make sense, for a variety of reasons. Notably, South Africa, traditionally seen as a poor performer, appears in the top group for PMTCT. Its placement raises three issues. First, this is a static analysis and does not consider past levels of response. South Africa now has relatively high coverage, although this has only recently been achieved. Second, coverage across the sample is so poor that any large-scale program shifts a country into the top group, and even controlling for South Africa's much higher per capita income and stronger health infrastructure does little to change this. Finally (and this applies to the other components as well), many observers expected certain countries to take the lead. This expectation is not captured in the ranking, but countries like South Africa, Nigeria, and India are seen as regional leaders and should perhaps be held to a higher standard.

In the ARV treatment component, countries such as Rwanda, Mali, and Burkina Faso are found to be delivering higher-than-expected service coverage. This does not mean that they have the highest coverage rates. As seen in the raw data, Costa Rica and Botswana reported the highest coverage, at 95%. Rwanda reported coverage of 72%, and Mali and Burkina Faso much lower rates of 37% and 39%, respectively. They are placed in the top third because all three were expected to perform far worse than they did, given their low GDP per capita, their higher-than-average health burden from other causes, and their low level of health sector coverage. All three reported less than 35% urbanization, and only Burkina Faso reported over 50% of births as being attended by skilled professionals.

Countries such as Botswana and Namibia, which have high coverage rates for treatment, are not listed as "over-achievers." While doing commendably well, these countries have not exceeded expectations to the same degree. Both are relatively wealthy, have a lower-than-average health burden from other causes, and have good health infrastructure; and, although prevalence is high, their small total populations mean that the number of people living with HIV is small. Given that both are high-prevalence countries, and high-prevalence countries generally have better coverage, they were also expected to have high coverage. Indeed, because coverage cannot exceed 100%, such high expectations leave little room above them, and it would not be possible for these countries to exceed expectations by as much as, for example, Rwanda.
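The ceiling effect can be checked with simple arithmetic. Assuming, as a sketch, that the score is the log of reported over expected coverage (note 23 indicates that the dependent variable is logged), a country expected to reach 80% can score at most log(1/0.8), even at full coverage. The expected values below are invented for illustration; only the reported coverage figures (95% for Botswana, 72% for Rwanda) come from the text.

```python
import math

def log_score(reported: float, expected: float) -> float:
    """Assumed score: log of reported coverage relative to expected coverage."""
    return math.log(reported / expected)

def max_log_score(expected: float) -> float:
    """Best possible score, since coverage cannot exceed 100%."""
    return math.log(1.0 / expected)

# Hypothetical expectations; reported figures are from the text.
botswana = log_score(0.95, expected=0.80)
rwanda = log_score(0.72, expected=0.25)
print(round(botswana, 2), round(max_log_score(0.80), 2), round(rwanda, 2))  # 0.17 0.22 1.06
```

Under these invented expectations, even universal coverage in Botswana would score well below Rwanda's reported 72%.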

For the orphan-schooling ratio, the above-expectations group includes all the countries from the unadjusted top fifteen except Equatorial Guinea, Togo, and the Dominican Republic. These countries reported ratios higher than or similar to those of Uganda and Kenya, which did not make it into the above-expectations group. This is because Equatorial Guinea, Togo, and the Dominican Republic are wealthier than Uganda and Kenya in terms of per capita income and have lower orphaning rates; as a result, the expectation for these countries was slightly higher.

Overall, the analysis highlights that some countries appear to be consistently strong in delivering AIDS-related services and may stand as models to other countries facing similar structural contexts. The analysis has also highlighted consistently poor performers, countries that also require further examination and discussion. Failure to respond to HIV and AIDS results in deaths, and there is a great need to find ways to push for accountability in those countries where the response is poor.

Conclusions and next steps

In this paper, we have attempted to categorize countries in terms of their relative efforts in addressing the global AIDS pandemic. While any set of cross-country comparisons and ranking exercises is fraught with concerns about the reliability and validity of measurement strategies, as well as analytic adjustments or the lack thereof, the results presented here provide a useful portrait for identifying which governments should be held accountable to increase the response to AIDS. Ideally, over the longer term, all countries would be able to approach near-universal coverage and infection rates would be lower. But in the near term, relative rankings provide an empirical basis for supporting efforts to increase accountability.

The analysis presented in this paper has highlighted that responses in some countries have surpassed expectations, having done well in spite of difficult circumstances. The results suggest the need to examine not only the best-known "success stories," but also the responses that are impressive given the circumstances in which they were achieved. We may have more to learn from Burkina Faso than from Botswana. That said, our categorization of countries as doing relatively well should not be misinterpreted as suggesting that no further action would be useful. On the contrary, major gaps still exist in basic service coverage, let alone quality of coverage, and these still demand attention.

Countries that perform relatively badly need special attention, particularly those that perform badly across components. The present analysis weakens some common excuses: poor performers cannot easily argue that it is income, other health demands, their health system, or the size of the epidemic they face that leads to their categorization. The analysis suggests that such poor performance results either from relatively less effort on the part of the low-ranking countries or from efforts that are ineffective. This analysis may support existing efforts to hold the leaders of these countries, and other actors, accountable.

Our investigative premise is that fruitful gains can be made from effective comparisons. Countries did not initially face the AIDS epidemic on a “level playing field,” and we believe that near-term assessments of progress ought to be tempered with some appreciation of background conditions. While this approach is an important initial step, we propose several additional steps going forward.

First, beyond static comparisons, countries need to be compared in terms of patterns of change. Each year, various stakeholders devote substantial effort and energy to fighting the spread and impact of the epidemic, and these efforts need to be measured and assessed.

Second, we require analysis of additional measures of AIDS service delivery and policy effort. Recognizing that our three indicators provide only a superficial portrait of what goes on in any single country, a much fuller body of evidence is needed to adequately rate and rank countries.

Finally, given the emphasis of these findings on the importance of background conditions, as well as analytic concerns with developing suitable metrics for making comparisons, alternatives to our regression approach ought to be explored. For example, sub-group comparisons for countries that share the most relevant background conditions might be conducted, to identify different types of metrics for different sets of countries. This seems logical since not all recommendations for action ought to apply equally to all countries. Under-achievement among poor, high-prevalence countries will imply different modes of accountability than will under-achievement among wealthier, high-prevalence countries.
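A sub-group comparison of this kind could, for example, standardize coverage within cells of countries that share background conditions. The sketch below is hypothetical throughout: the banding of countries by income and prevalence, the dictionary keys, and the use of within-cell z-scores are illustrative assumptions rather than a method proposed in this paper.

```python
from collections import defaultdict
from statistics import mean, pstdev

def subgroup_z_scores(countries):
    """Standardize coverage within cells of countries sharing background conditions.

    countries: list of dicts with hypothetical keys
               'name', 'coverage', 'income_band', 'prevalence_band'
    """
    cells = defaultdict(list)
    for c in countries:
        cells[(c["income_band"], c["prevalence_band"])].append(c)

    scores = {}
    for members in cells.values():
        values = [m["coverage"] for m in members]
        mu, sd = mean(values), pstdev(values)
        for m in members:
            # A single-member cell (or identical values) gives no basis for comparison
            scores[m["name"]] = 0.0 if sd == 0 else (m["coverage"] - mu) / sd
    return scores

countries = [
    {"name": "A", "coverage": 0.2, "income_band": "low", "prevalence_band": "high"},
    {"name": "B", "coverage": 0.4, "income_band": "low", "prevalence_band": "high"},
    {"name": "C", "coverage": 0.8, "income_band": "middle", "prevalence_band": "high"},
    {"name": "D", "coverage": 0.9, "income_band": "middle", "prevalence_band": "high"},
]
print({k: round(v, 2) for k, v in subgroup_z_scores(countries).items()})
# {'A': -1.0, 'B': 1.0, 'C': -1.0, 'D': 1.0}
```

Here B and D receive the same score even though B's coverage is less than half of D's, because each is compared only with countries in similar circumstances; the price is that small cells give unstable comparisons.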

Such research and analysis will be the subject of future efforts. In the meantime, the relative response approach can serve as a baseline for comparing countries in their responses to the AIDS pandemic, representing an advance over rankings based on raw service coverage data alone.

Acknowledgments

The authors would like to acknowledge that this project was initiated and supported by AIDS Accountability International as part of their ongoing accountability project. We would also like to acknowledge the financial support of the Swedish and Danish Ministries of Foreign Affairs. Thanks also to Wesley Oakes and Kate Meyerowits for their assistance with data collection and analysis and to all those who commented on earlier drafts.

Chris Desmond, PhD, is a research associate at the FXB Center for Health and Human Rights, Harvard School of Public Health, USA.

Evan Lieberman, PhD, is Associate Professor of Politics & Richard Stockton Bicentennial Preceptor, Princeton University, USA.

Anita Alban, PhD, is a health economist at EASE International, Copenhagen, Denmark.

Anna-Mia Ekström, MD, PhD, is an epidemiologist at the Division of International Health, Dept. of Public Health, Karolinska Institutet & Dept. of Infectious Diseases, Karolinska University Hospital, site Huddinge, Stockholm, Sweden.

References

1. See, for example, the ranking of health systems in the World Health Organization, The World Health Report 2000: Health Systems: Improving Performance (Geneva: WHO, 2000). Available at: http://www.who.int/whr/2000/en/index.html.

2. UN, Declaration of Commitment on HIV/AIDS (New York: UN, 2001).

3. UNAIDS, Progress Report of the Global Response to the HIV/AIDS Epidemic 2004 (Geneva: WHO, 2004); USAID, UNAIDS, WHO and The Policy Project, The Level of Effort in the National Response to HIV/AIDS: The AIDS Program Effort Index 2003 Round (Washington, D. C.: USAID, 2003).

4. AIDS Accountability International: http://www.aidsaccountability.org.

5. UNAIDS, Report on the Global AIDS Epidemic: A UNAIDS 10th Anniversary Special Edition (Geneva: WHO, 2006).

6. Archival UNAIDS internet data on the Comoros as of October 17, 2007 available at: http://web.archive.org/web/20071018175254/http://unaids.org/en/Regions_Countries/Countries/comoros.asp.

7. E. Lieberman, “Ethnic Politics, Risk, and Policy-Making: A Cross-National Statistical Analysis of Government Responses to HIV/AIDS,” Comparative Political Studies 40/12 (2007), pp. 1407–1432; N. Nattrass, “What Determines Cross-Country Access to Antiretroviral Treatment?” Development Policy Review 3 (2006), pp. 321–337.

8. For example, if on average, democratic countries tended to perform better, they would still appear as better performers in our analyses. In a working paper she shared with us, Nattrass (see note 7) has also been developing a country rating based on a regression-residual approach. However, the work we have seen focuses only on ARV treatment, it interprets residuals as “leadership,” and incorporates a much longer list of control variables, including those that we have deliberately chosen to exclude. While we have opted for a different approach, hers provides a sensible alternative and it will be up to readers to evaluate the relative strengths and weaknesses of the two.

9. WHO, UNAIDS & UNICEF, Towards Universal Access: Scaling up Priority HIV/AIDS Interventions in the Health Sector (Geneva: WHO, 2007).

10. UNAIDS (2006, see note 5).

11. WHO, World Health Report 2004: Changing History (Geneva: WHO, 2004).

12. WHO, WHO Database on Skilled Attendant at Delivery (Geneva: WHO, 2006). Available at: http://www.who.int//reproductive-health/global_monitoring/data.html.

13. E. Hertz, J. Herbert and J. Landon, “Social and Environmental Factors and Life Expectancy, Infant Mortality and Maternal Mortality Rates: Results of a Cross-National Comparison,” Social Science and Medicine 39 (1994), pp. 105–114; J. Robinson and H. Wharrad, “The Relationship Between Attendance at Birth and Maternal Mortality Rates: An Exploration of United Nations’ Data Sets Including the Ratio of Physicians and Nurses to Population, GNP per capita and Female Literacy,” Journal of Advanced Nursing 34 (2001), pp. 445–455; Lieberman (see note 7); Nattrass (see note 7).

14. Population Division, Department of Economic and Social Affairs, United Nations Secretariat, World Population Prospects: The 2004 Revision (New York: United Nations, 2005). Updated population Database is available at: http://esa.un.org/unpp/.

15. The World Bank Group, World Bank Development Indicators: Gross national income per capita 2007, Atlas method and PPP (Washington, D. C.: The World Bank, 2008). Available at: http://siteresources.worldbank.org/DATASTATISTICS/Resources/GNIPC.pdf.

16. Hertz et al. (see note 13); Robinson and Wharrad (see note 13).

17. UNAIDS (2006, see note 5); Lieberman (see note 7); Nattrass (see note 7).

18. WHO, UNAIDS & UNICEF (see note 9).

19. Demographic and Health Surveys, in UNAIDS, Report on the Global AIDS Epidemic (see note 5).

20. STATA Version 8, Stata Corporation, Texas.

21. The nature of our analysis requires us to compare countries on a continuum, and yet certain countries had essentially zero service delivery, rendering them qualitatively different from the rest. As a result, countries that reported 1% or lower ARV or PMTCT coverage were excluded from the regression and included in the rankings as “under-achievers.”

22. The annex is available online at: http://hhrjournal.org/blog/wp-content/uploads/2008/12/relative-response-statistical-annex-hhr102.pdf.

23. The sensitivity analysis includes, among other things, dropping HIV prevalence so as not to hold higher prevalence countries to a higher standard, the inclusion of countries with very low coverage, and not taking the log of the dependent variable and so ranking based on absolute differences.